Autofac on Azure Functions.

Azure keeps evolving quickly. The latest hot topic is Azure Functions.

What makes Azure Functions attractive?

Over the past six months I looked at Azure Functions several times. On the one hand I was pretty busy with a move to a different company; on the other hand, the static nature of every single Azure Function raised some question marks for me. With the beta of Durable Functions, Azure Functions got my attention again. They turn some really time-consuming implementation topics into very simple ones.

My new company evaluated Azure Service Fabric, which offers a lot of possibilities but has one drawback: integration into the Azure infrastructure has to be done on one’s own. In my experience this is doable, but it costs significant time to master the infrastructural topics.

This is where Azure Functions simplify things greatly. Let’s have a short look at how Azure Functions handle infrastructural topics before we dive into the topic given by the title of this article.

Infrastructure handling is greatly simplified by Azure Functions

Let’s say you want to send messages to Service Bus. To add some meat to the messages, the content of each one will be a random superhero name. These messages shall be retrieved by another function that persists the content in an Azure Cosmos DB instance.

[FunctionName("SendServiceBusMessagePeriodicallyFunction")]

[return: ServiceBus("samplequeue", Connection = "ConnectionStringsServiceBus")]

public static Person Run([TimerTrigger("*/1 * * * * *")]TimerInfo myTimer, TraceWriter log)

{

log.Info($"C# Timer trigger function executed at: {DateTime.Now}");

var superhero = GetRandomSuperhero();

superhero.DateTime = DateTime.Now;

return superhero;

}

Azure Functions are meant to be short by design, and Microsoft does some pretty smart things to support that. The function itself can focus on the actual task: creating the content of the message. Sending the message to a Service Bus instance only requires configuration and declarative work.

[return: ServiceBus("samplequeue", Connection = "ConnectionStringsServiceBus")]

This line specifies that the return value will be a message. The message is sent to the queue “samplequeue” using the connection that is specified in the local.settings.json file. The developer does not have to handle creation of the queue instance, timeouts, message serialization or retry policies. Just focus on the content. Here is the short sample that stores the message in Cosmos DB:

[FunctionName("QueueTriggerCosmosDbPersistenceFunction")]

public static void Run(

[ServiceBusTrigger("samplequeue", AccessRights.Manage, Connection = "ConnectionStringsServiceBus")]SuperHero superhero,

[DocumentDB("SuperheroList", "Superheros", Id = "RowKey", ConnectionStringSetting = "SuperheroCosmosDB")] out dynamic storeSuperhero)

{

storeSuperhero = new {RowKey=Guid.NewGuid(), superhero.FirstName, superhero.LastName, superhero.DateTime};

}

Azure Functions can be triggered in several ways: HttpTrigger, TimerTrigger, Storage Queue, Blob and Table, Service Bus, Cosmos DB and more. Again, the integration is done by attributes and always consists of roughly the same information:

[ServiceBusTrigger("samplequeue", AccessRights.Manage, Connection = "ConnectionStringsServiceBus")]SuperHero superhero,

The name of the queue, the access rights and the connection information. There is no handling of connections or message retrieval. It is not even necessary to handle the message status after working with the content: the message is completed after a successful run of the function. Writing to Cosmos DB is also very simple.

[DocumentDB("SuperheroList", "Superheros", Id = "RowKey", ConnectionStringSetting = "SuperheroCosmosDB")] out dynamic storeSuperhero)

The information that needs to be provided is comparable to Service Bus. Not really surprising: it needs a connection and some specific information, here the database and collection names. Again, just focus on the content and hand over whatever needs to be persisted; the Azure Functions infrastructure takes care of the rest.

The drawback: the static nature of Azure Functions

Pretty amazing. Let’s move on to the actual topic of this article. Usually a piece of functionality in production will be larger than these few lines of code. It will rely on functionality that is shared between functions, or it simply contains more complex logic. When you are used to the simplicity of dependency injection, the static nature of Azure Functions can feel like a major hurdle.

The solution: Azure Functions extensibility

Luckily, Azure Functions offer great extensibility. The same mechanism that powers the attributes shown before can be used to provide dependency injection. Starting from the samples provided by Microsoft, freely available on GitHub, it is pretty easy to put the necessary pieces together. Have a look at the following resources to get started:

Azure WebJobs SDK Extensions: provides samples of how to integrate custom bindings and triggers

Azure WebJobs SDK: the complete sources of the Azure Functions implementation.

The implementation

Starting with the extensions, let’s follow the way the Azure Functions infrastructure itself works: using attributes to offer functionality.

using System;

using Microsoft.Azure.WebJobs.Description;

namespace AutofacOnFunctions.Services.Ioc

{

[AttributeUsage(AttributeTargets.Parameter)]

[Binding]

public class InjectAttribute : System.Attribute

{

public string Name { get; set; }

public bool HasName => !string.IsNullOrWhiteSpace(Name);

public InjectAttribute()

{

}

public InjectAttribute(string name)

{

Name = name;

}

}

}

The first attribute, AttributeUsage, tells the framework that InjectAttribute is going to be used on parameters of an Azure Function. The second, Binding, marks it as a binding. There are several ways to integrate such an attribute. For dependency injection, it is necessary to know the target type of the parameter. There may be simpler bindings where the target type is known up front; in that case, adding rules and using converters is sufficient. To find out the target type at runtime, IBinding and IBindingProvider need to be implemented.

using System.Reflection;

using System.Threading.Tasks;

using Microsoft.Azure.WebJobs.Host.Bindings;

using Microsoft.Azure.WebJobs.Host.Protocols;

namespace AutofacOnFunctions.Core.Services.Ioc

{

public class InjectAttributeBinding : IBinding

{

private readonly ParameterInfo _parameterInfo;

private readonly IObjectResolver _objectResolver;

public InjectAttributeBinding(ParameterInfo parameterInfo, IObjectResolver objectResolver)

{

_parameterInfo = parameterInfo;

_objectResolver = objectResolver;

}

public Task<IValueProvider> BindAsync(object value, ValueBindingContext context)

{

return Task.FromResult<IValueProvider>(new InjectAttributeValueProvider(_parameterInfo, _objectResolver));

}

public Task<IValueProvider> BindAsync(BindingContext context)

{

return Task.FromResult<IValueProvider>(new InjectAttributeValueProvider(_parameterInfo, _objectResolver));

}

public ParameterDescriptor ToParameterDescriptor()

{

return new ParameterDescriptor

{

Name = _parameterInfo.Name,

DisplayHints = new ParameterDisplayHints

{

Description = "Inject services",

DefaultValue = "Inject services",

Prompt = "Inject services"

}

};

}

public bool FromAttribute => true;

}

}

There are two points of interest in the constructor of the InjectAttributeBinding implementation. The first is the parameterInfo. With that information it is possible to find out the target type that shall be resolved. The other is the IObjectResolver instance. It is an abstraction that resolves components from Autofac via a ServiceLocator.
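The article does not show IObjectResolver or ServiceLocator themselves. As a rough idea of what such an abstraction could look like, here is a minimal sketch built on Autofac's public API (ContainerBuilder, Resolve, ResolveNamed). The shape of the IObjectResolver interface and the names AutofacObjectResolver, ServiceLocatorSketch and GreetingModule are assumptions made for illustration; the implementation in the repository may well differ.

```csharp
using System;
using Autofac;

// Assumed shape of the resolver abstraction; not taken from the repository.
public interface IObjectResolver
{
    object Resolve(Type type);
    object Resolve(Type type, string name);
}

// Resolves services from an Autofac component context.
public sealed class AutofacObjectResolver : IObjectResolver
{
    private readonly IComponentContext _context;

    public AutofacObjectResolver(IComponentContext context)
    {
        _context = context ?? throw new ArgumentNullException(nameof(context));
    }

    // Resolve by type only, e.g. for a plain [Inject] parameter.
    public object Resolve(Type type) => _context.Resolve(type);

    // Resolve a named registration, e.g. for [Inject("name")].
    public object Resolve(Type type, string name) => _context.ResolveNamed(name, type);
}

// Hypothetical static locator that builds the container once and keeps it alive.
public static class ServiceLocatorSketch
{
    private static IContainer _container;

    public static IObjectResolver Resolver { get; private set; }

    public static void Initialize(params Autofac.Module[] modules)
    {
        var builder = new ContainerBuilder();
        foreach (var module in modules)
        {
            builder.RegisterModule(module);
        }

        _container = builder.Build();
        Resolver = new AutofacObjectResolver(_container);
    }
}

// Hypothetical module, used only to demonstrate a default and a named registration.
public class GreetingModule : Autofac.Module
{
    protected override void Load(ContainerBuilder builder)
    {
        builder.RegisterInstance("hello").As<string>();
        builder.RegisterInstance("hello, named").Named<string>("special");
    }
}
```

After calling ServiceLocatorSketch.Initialize(new GreetingModule()), Resolver.Resolve(typeof(string)) returns the default registration, while Resolver.Resolve(typeof(string), "special") returns the named one. Working with System.Type instead of generics matters here, because the binding only learns the target type at runtime from the ParameterInfo.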

using System;

using System.Reflection;

using System.Threading.Tasks;

using Microsoft.Azure.WebJobs.Host.Bindings;

namespace AutofacOnFunctions.Core.Services.Ioc

{

public class InjectAttributeBindingProvider : IBindingProvider

{

public Task<IBinding> TryCreateAsync(BindingProviderContext context)

{

if (context == null)

{

throw new ArgumentNullException(nameof(context));

}

var parameterInfo = context.Parameter;

var injectAttribute = parameterInfo.GetCustomAttribute<InjectAttribute>();

if (injectAttribute == null)

{

return Task.FromResult<IBinding>(null);

}

var objectResolver = ServiceLocator.Resolve<IObjectResolver>();

return Task.FromResult<IBinding>(new InjectAttributeBinding(parameterInfo, objectResolver));

}

}

}

With the binding in place, the next interface to implement is IBindingProvider. The implementation ensures that the context is available and that the parameter carries the attribute in question. If it does, the provider gets an IObjectResolver instance from the ServiceLocator and passes it to the binding together with the ParameterInfo retrieved from the context.

The next necessary step is to make this available to the Azure Functions framework. When Azure Functions encounters an unknown attribute in a function’s parameter list, it searches for an IExtensionConfigProvider implementation that can resolve the attribute in question.
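To make the role of ParameterInfo more tangible, the following sketch uses plain reflection to find attributed parameters and read their target types. It is only an illustration: InjectSketchAttribute is a stand-in for the article's InjectAttribute without the WebJobs [Binding] marker (so it compiles without the SDK), and IGreeter, SampleFunction and InjectParameterInspector are hypothetical names.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Stand-in for the article's InjectAttribute, without the WebJobs [Binding] marker.
[AttributeUsage(AttributeTargets.Parameter)]
public sealed class InjectSketchAttribute : Attribute
{
    public string Name { get; set; }
}

// Hypothetical service contract and function, used only for illustration.
public interface IGreeter
{
    string Greet();
}

public static class SampleFunction
{
    public static string Run(string input, [InjectSketch(Name = "polite")] IGreeter greeter)
    {
        return greeter.Greet() + input;
    }
}

public static class InjectParameterInspector
{
    // Returns "parameterName:TargetType:Name" for every parameter that carries the attribute.
    public static string[] Describe(MethodInfo method)
    {
        return method.GetParameters()
            .Select(p => new { Parameter = p, Attribute = p.GetCustomAttribute<InjectSketchAttribute>() })
            .Where(x => x.Attribute != null)
            .Select(x => $"{x.Parameter.Name}:{x.Parameter.ParameterType.Name}:{x.Attribute.Name}")
            .ToArray();
    }
}
```

Calling InjectParameterInspector.Describe(typeof(SampleFunction).GetMethod("Run")) yields a single entry for the greeter parameter. The same two pieces of information, the attribute and ParameterInfo.ParameterType, are what the binding provider and the binding work with.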

using System;

using System.Collections.Generic;

using Autofac;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Host.Config;

namespace AutofacOnFunctions.Core.Services.Ioc

{

public class InjectAttributeExtensionConfigProvider : IExtensionConfigProvider

{

public void Initialize(ExtensionConfigContext context)

{

if (context == null)

{

throw new ArgumentNullException("context");

}

InitializeServiceLocator(context);

context.Config.RegisterBindingExtensions(new InjectAttributeBindingProvider());

}

private static void InitializeServiceLocator(ExtensionConfigContext context)

{

var bootstrapperCollector = new BootstrapperCollector();

var bootstrappers = bootstrapperCollector.GetBootstrappers();

if (bootstrappers.Count == 0)

{

context.Trace.Warning(

"No bootstrapper instances had been recognized, injection will not function.");

}

var modules = new List<Module>();

foreach (var bootstrapper in bootstrappers)

{

var instance = (IBootstrapper) Activator.CreateInstance(bootstrapper);

modules.AddRange(instance.CreateModules());

}

InjectConfiguration.Initialize(modules.ToArray());

}

}

}

This class is responsible for registering the InjectAttributeBindingProvider described before. Additionally, it is the right place to register all necessary services with Autofac. How is that done? To be honest, it is kept very simple in this very first implementation. Let’s consider the reasons for the current approach.

Things to be considered:

- The Azure Functions framework calls Azure Functions directly and autonomously. So that the developer does not have to find a place to configure the necessary services, the implementation searches for implementations of a certain interface, following the architecture of Azure Functions.

- Autofac allows for different kinds of registration. To unify how registrations are done and to separate dependency injection services by topic, modules have been chosen. It should not be a problem to add other kinds of registration later.
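The search for implementations of an interface mentioned in the first point can be sketched with plain reflection. This is not the repository's BootstrapperCollector, just one possible way to write such a scan; ImplementationScanner, IPlugin, SamplePlugin and AbstractPlugin are hypothetical names. The sketch also guards against ReflectionTypeLoadException, which Assembly.GetTypes() throws when some type in an assembly cannot be loaded, for example because a referenced assembly is missing at runtime.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class ImplementationScanner
{
    // Returns all concrete types in the given assemblies that implement 'contract'.
    public static List<Type> FindImplementations(Type contract, IEnumerable<Assembly> assemblies)
    {
        return assemblies
            .SelectMany(GetLoadableTypes)
            .Where(t => contract.IsAssignableFrom(t) && !t.IsInterface && !t.IsAbstract)
            .ToList();
    }

    // GetTypes() fails as a whole if a single type cannot be loaded. The exception
    // still carries the types that did load; entries that failed are null.
    private static IEnumerable<Type> GetLoadableTypes(Assembly assembly)
    {
        try
        {
            return assembly.GetTypes();
        }
        catch (ReflectionTypeLoadException ex)
        {
            return ex.Types.Where(t => t != null);
        }
    }
}

// Hypothetical contract and implementations, only for the usage example.
public interface IPlugin
{
}

public sealed class SamplePlugin : IPlugin
{
}

public abstract class AbstractPlugin : IPlugin
{
}
```

A call such as ImplementationScanner.FindImplementations(typeof(IPlugin), AppDomain.CurrentDomain.GetAssemblies()) returns SamplePlugin but neither the interface nor the abstract class. Skipping unloadable types keeps a single problematic assembly from preventing the whole scan, at the price of silently ignoring types that failed to load.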

For the initialization of Autofac, this simple interface is available.

using Autofac;

namespace AutofacOnFunctions.Core.Services.Ioc

{

public interface IBootstrapper

{

Module[] CreateModules();

}

}

You just need to create a class like this to initialize Autofac:

using Autofac;

using AutofacOnFunctions.Sample.Services.Modules;

using AutofacOnFunctions.Core.Services.Ioc;

namespace AutofacOnFunctions.Sample.Bootstrap

{

public class Bootstrapper : IBootstrapper

{

public Module[] CreateModules()

{

return new Module[]

{

new ServicesModule()

};

}

}

}

The module itself adds exactly one service:

using Autofac;

using AutofacOnFunctions.Sample.Services.Functions;

namespace AutofacOnFunctions.Sample.Services.Modules

{

public class ServicesModule : Module

{

protected override void Load(ContainerBuilder builder)

{

builder.RegisterType<TestIt>().As<ITestIt>();

}

}

}

To use dependency injection, just add the necessary services, each marked with an [Inject] attribute, to your function’s parameter list.

using System.Linq;

using System.Net;

using System.Net.Http;

using System.Threading.Tasks;

using AutofacOnFunctions.Core.Services.Ioc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.Azure.WebJobs.Host;

namespace AutofacOnFunctions.Sample.Services.Functions

{

public static class TestFunction1

{

[FunctionName("TestFunction1")]

public static async Task<HttpResponseMessage> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)]HttpRequestMessage req,

TraceWriter log,

[Inject] ITestIt testit)

{

log.Info("C# HTTP trigger function processed a request.");

return req.CreateResponse(HttpStatusCode.OK,$"Hello. Dependency injection sample returns '{testit.CallMe()}'");

}

}

}

Adding the Inject attribute to the service you are looking for should do the magic.

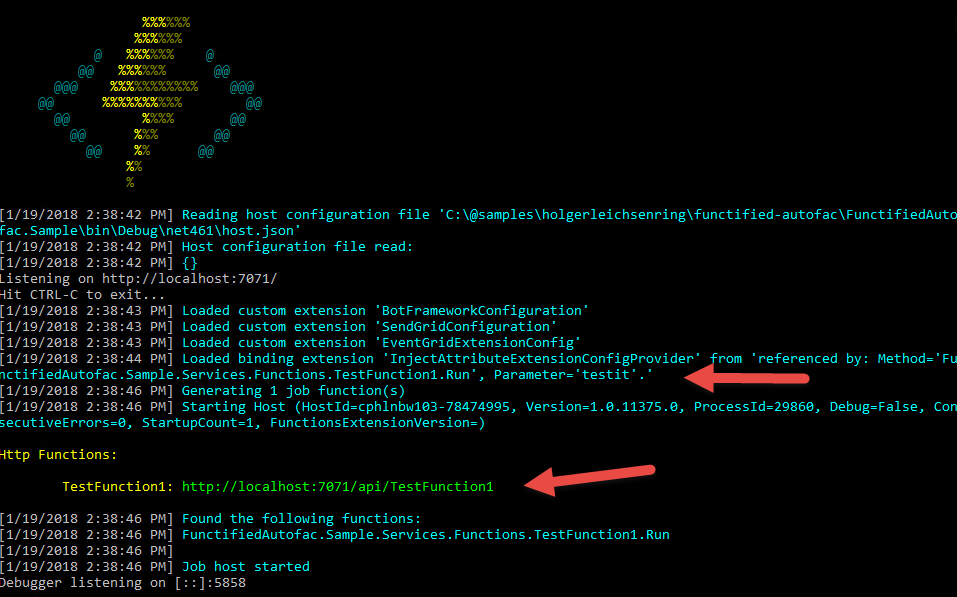

This is what happens when you start the solution, provided Visual Studio 2017 is installed. That’s the first version that supports Azure Functions.

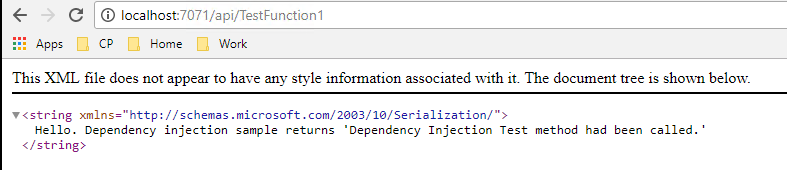

The first function that uses the Inject attribute causes the framework to load the extensions. The function provided by the sample is a simple HTTP trigger function. Use the URL above for a short test of the functionality.

Copy the URL and paste it into the browser of your choice. As you can see, the test method returns a string. Works!

Conclusion

The static nature of Azure Functions can easily be overcome with the extensibility the framework provides. Let me just mention some other solutions out there:

- Using a different approach with initialization within the function: devkimchi

- Excellent sample using the same approach with Unity: wille-zone

Finally, find all sources in this repository. A NuGet package will follow, as well as tests. Enjoy!

Update (2018-03-22)

Unfortunately, it does not seem to be possible to generate a NuGet package. Microsoft.NET.Sdk.Functions causes a malfunction when trying to generate NuGet packages with Visual Studio. This is a critical issue: for libraries in larger Azure Functions applications, assemblies must be referenced directly instead of relying on packages. Once this is solved, expect a NuGet package.

Unable to generate nuget package when using nuget “Microsoft.NET.Sdk.Functions” in a library project

Hi Holger,

It’s working now. I took your latest version.

Thank you for your help.

Now I am going to try this with v2.

Regards,

Senthilraj

Hi,

I am using AutofacOnFunctions V1 in my Azure function. It’s working fine in debug mode, but after I published the function to the Azure portal, I am getting the following error message:

Event time

12/09/2018 18:37:24

Message

Unable to load one or more of the requested types. Retrieve the LoaderExceptions property for more information.

Exception type

System.Reflection.ReflectionTypeLoadException

Failed method

AutofacOnFunctions.Core.Services.Ioc.BootstrapperCollector+c.b__0_0

Can someone help me with this, please?

Hi Senthiraj,

you may want to try the newest version that I published this week. You would need to move to .NET Core, though. The .NET Framework version of AutofacOnFunctions will follow; I am working on it. I will have a look at your issue. Thanks for the comprehensive description; this looks like issue #2 on GitHub, which I have not been able to reproduce so far. I will try again and let you know.

Best,

Holger

Thank you for your response.

I have figured out when this issue occurs.

It’s related to AutoMapper: whenever I try to inject IMapper (“[Inject]IMapper mapper”) into the function, I get this error.

Can you check whether I am doing this correctly?

[FunctionName("ServiceBusMessageProcessor")]

public static void Run([ServiceBusTrigger("xxxxx", "xxxx", AccessRights.Listen, Connection = "serviceBusConnection")]BrokeredMessage message, TraceWriter log, [Inject]IMapper mapper)

{

Report report = message.GetBody();

log.Info($"ServiceBus queue trigger function processed message: {report.CategoryId}");

//var streetissue = mapper.Map(report);

}

———————————————–

public class Bootstrapper : IBootstrapper

{

public Module[] CreateModules()

{

return new Module[]

{

new ServicesModule()

};

}

}

————————————————

public class ServicesModule : Module

{

protected override void Load(ContainerBuilder builder)

{

builder.RegisterModule(new AutoMapperModule());

}

}

——————————————

public class AutoMapperModule : Module

{

protected override void Load(ContainerBuilder builder)

{

builder

.RegisterInstance(new MapperConfiguration(c =>

{

//Register Profiles

c.AddProfile();

}).CreateMapper())

.As()

.SingleInstance();

}

}

—————————————–

Hi Senthilraj,

I am going to set up a sample and give it a try. Did you try out doing the same with the latest version?

Best,

Holger

Hi Holger,

Thank you for sharing this. I have experienced a problem so I wanted to share here.

I started getting “System.Reflection.ReflectionTypeLoadException: Unable to load one or more of the requested types. Retrieve the LoaderExceptions property for more information.” exception. After some investigation I noticed that it is related to the Inject attribute, if I don’t use it everything is fine, if I use it function fails to start in Azure but again fine in my local environment. I can also swear I saw the function sometimes ran without any issues in Azure as well, but I can’t reproduce this 🙂

There is no global exception handling so it took some time but I have managed to find the related loader exception.

Could not load file or assembly ‘System.Runtime.Loader, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a’ or one of its dependencies. The system cannot find the file specified.

This was not something I was expecting. After a long investigation I made the code work. The exception happens in BootstrapperCollector, in GetBootstrappers method.

This is the line that throws the exception. But this is not a bug in the code.

AppDomain.CurrentDomain.GetAssemblies().SelectMany(x => x.GetTypes()).Where(x => typeof(IBootstrapper).IsAssignableFrom(x) && !x.IsInterface && !x.IsAbstract).ToList();

After a lot of trial and error, I have found out that somehow Microsoft.IdentityModel.* assemblies were causing the problem. I don’t know why or how they got referenced, but if I update the line as follows I got no exceptions. Why this happens only in Azure, I also have no idea.

AppDomain.CurrentDomain.GetAssemblies()

.Where(a => !a.FullName.Contains("Microsoft.IdentityModel"))

.SelectMany(x => x.GetTypes())

.Where(x => typeof(IBootstrapper).IsAssignableFrom(x) && !x.IsInterface && !x.IsAbstract).ToList();

I hope this helps others.

can

Hi Can,

many thanks for the comprehensive analysis!

I currently have no idea why loading and scanning the IdentityModel assemblies should lead to a loader exception. I haven’t experienced anything like this so far. Anyway, I am going to update the code with your changes and have a look at it later to, in the best case, dig up the root cause of this behavior. I’ll let you know if I can bring it to light.

thanks again!

Hi Holger an update,

After adding new functions we had the same issue, so basically now I am filtering all System and Microsoft assemblies. So it wasn’t just the identitymodel, which also did not make sense like you have said.

can

Hi Can,

thanks for keeping me updated! I couldn’t reproduce the behavior. Could you give me a little bit more of information what kind of libs you use?

Holger

Hi Holger,

I will try to reproduce this in a simple project and try to share with you, though this may take some time 🙁

can

Hi Can,

sounds perfect!

Holger

Great post! With your code, it is easy to add the Inject attribute in the function method call and register the class in the ServicesModule – and the dependency injection works magically.

I did try extending your sample to add logging using Autofac.Extras.DynamicProxy to inject the logging outside of the TestIt class.

e.g. builder.RegisterType().As().EnableClassInterceptors();

However, that was not successful. Do you know if this is possible in Azure Functions at present? Thanks!

Hi Adrian,

thanks. 🙂

I did try it by myself today. I do get an exception:

Exception thrown: ‘System.MissingMethodException’ in Autofac.dll

An exception of type ‘System.MissingMethodException’ occurred in Autofac.dll but was not handled in user code

Method not found: ‘Autofac.Builder.IRegistrationBuilder`3 Autofac.Extras.DynamicProxy.RegistrationExtensions.EnableClassInterceptors(Autofac.Builder.IRegistrationBuilder`3)’.

References are all there, and it compiles fine. I guess it has something to do with Azure Functions itself, because there is no issue using Autofac AOP based on Castle DynamicProxy within .NET Core or .NET Framework.

I’ll have another look and let you know if I can make it running.

Thanks! I came back to this after being away and I see that https://blog.mexia.com.au/dependency-injections-on-azure-functions-v2

does indicate that Azure Functions V2 still has some issues. Per that article “If you want to use the IoC container from the 3rd-party library, stay on V1. If you want to use the IoC container provided by ASP.NET Core, use V2”

Hi Adrian,

thanks for keeping me updated! I did not try the V2 variant yet. I’ll have a look onto it and push a new article/ new code. Actually to be honest I do like to abstract away the DI framework of asp.net core for the same reasons I would abstract away the LogManager of log4net. Actually the flexibility to change a lib behind the scenes probably is worth the price for adding additional complexity. (I added a comment to the article sharing my thoughts on this)

Anyway, I am going to have a look and share my experience with that!

Hi Adrian,

I checked how Azure Functions V2 works, and there are actually not many differences. The good news is that NuGet packaging is now possible. Have a look at this post: http://codingsoul.de/2018/06/12/azure-functions-dependency-injection-autofac-on-functions-nuget-package/.

Thank you for brilliant article. Is it possible to have per request like semantic with your solution (one db context per azure function call) and did you have a chance to investigate System.MissingMethodException? I see that azure func emulator loads autofac.dll twice, first time for his internal purposes (pretty old one) and second one is from client code.

Hi Alex,

currently there is no way of recognizing whether a service is resolved for a particular invocation of a function. But that is a valid and sensible idea. I guess you want to avoid creating a database connection every time, but have a certain context per function? You may be able to solve that by creating at least three services:

1. A service that creates a connection (singleton)

2. A service that manages the connection (singleton)

3. A db context service that consumes the manager (transient)

Would that be feasible? The Autofac container is loaded exactly once for all functions. Actually Azure Functions does that exactly in that way with all attributes.
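As a rough illustration of the three services listed above, the registrations could look like the following Autofac module. All type names (IConnectionFactory, IConnectionManager, IDbContextService and their implementations, DataAccessModule) are hypothetical, and a string stands in for a real database connection; this is a sketch of the lifetime setup only, not a tested data access layer.

```csharp
using System;
using Autofac;

// 1. Creates connections (registered as a singleton below).
public interface IConnectionFactory
{
    string Create();
}

public sealed class ConnectionFactory : IConnectionFactory
{
    // A string stands in for a real connection object in this sketch.
    public string Create() => "connection-" + Guid.NewGuid();
}

// 2. Manages one shared connection (singleton).
public interface IConnectionManager
{
    string Connection { get; }
}

public sealed class ConnectionManager : IConnectionManager
{
    private readonly Lazy<string> _connection;

    public ConnectionManager(IConnectionFactory factory)
    {
        // The connection is created once, on first use.
        _connection = new Lazy<string>(() => factory.Create());
    }

    public string Connection => _connection.Value;
}

// 3. Context that consumes the manager (transient: a new instance per resolve).
public interface IDbContextService
{
    string Connection { get; }
}

public sealed class DbContextService : IDbContextService
{
    private readonly IConnectionManager _manager;

    public DbContextService(IConnectionManager manager)
    {
        _manager = manager;
    }

    public string Connection => _manager.Connection;
}

public class DataAccessModule : Autofac.Module
{
    protected override void Load(ContainerBuilder builder)
    {
        builder.RegisterType<ConnectionFactory>().As<IConnectionFactory>().SingleInstance();
        builder.RegisterType<ConnectionManager>().As<IConnectionManager>().SingleInstance();
        builder.RegisterType<DbContextService>().As<IDbContextService>().InstancePerDependency();
    }
}
```

With this module, every resolve of IDbContextService produces a new context object, while all contexts share the connection held by the singleton manager.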

I haven’t had time to double-check the issue with the MissingMethodException, but I’ve seen your Stack Overflow question. It looks like there are not too many people out there dealing with this very issue :-). From my point of view, it looks like Azure Functions doesn’t allow any dynamic proxying, but I do not have proof yet. I may give it a try in Azure Functions V2. As it is now possible to create NuGet packages without any hurdles with it, I am quite optimistic that something changed behind the scenes. I will try it out soon and let you know.

Just PERFECT!

Thanks! Unluckily at the moment there is no sensible way of providing this as a nuget package. Waiting for MS 😉